Neural Network from Scratch

It’s been a while since my last machine learning project: implementing a decision tree in Julia. This time I wanted to take a closer look at neural networks. I was recently shown an amazing book, 'Neural Networks and Deep Learning' by Michael Nielsen. He does a great job distilling the basics to the point where his explanations become intuitive rather than merely informative. I will not be able to explain anything as well as he does, so please check out his book.

The most basic neural networks are, as it turns out, surprisingly simple. It is possible to derive methods for building and training neural networks using only basic linear algebra and calculus. Neural networks have been around for quite some time, but it wasn’t until backpropagation was suggested as a way of training networks in the 1970s that they really took off. Much of their complexity stems from the sheer size of the networks. Modern computer hardware and new scientific computing methods were required for neural networks to reach the popularity they have today.

Backpropagation is the key to training neural networks. Essentially, backpropagation takes the error at the output of a network and updates weights, within the network, based on how much they contributed to that error. By calculating the error from a sample and adjusting the weights accordingly over many, many iterations the network can be trained.
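As a rough illustration of the idea (this is not the C implementation from the project), here is a minimal Python sketch of backpropagation for a tiny network with one hidden layer. The function names, the sigmoid activation, the squared-error cost, the omission of bias terms and the learning rate are all assumptions made for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_hidden, w_out, x):
    """Return the hidden activations and the single output activation."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    out = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, out


def cost(w_hidden, w_out, x, target):
    """Squared error for one sample (assumed cost function)."""
    _, out = forward(w_hidden, w_out, x)
    return 0.5 * (out - target) ** 2


def gradients(w_hidden, w_out, x, target):
    """Backpropagate the output error to get the gradient for every weight."""
    hidden, out = forward(w_hidden, w_out, x)
    # Error signal at the output neuron.
    delta_out = (out - target) * out * (1.0 - out)
    # Each hidden neuron's share of the error depends on its outgoing weight.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    grad_out = [delta_out * h for h in hidden]
    grad_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_hidden, grad_out


def train_step(w_hidden, w_out, x, target, lr=0.5):
    """Move every weight a small step against its gradient."""
    grad_hidden, grad_out = gradients(w_hidden, w_out, x, target)
    new_hidden = [[w - lr * g for w, g in zip(row, grow)]
                  for row, grow in zip(w_hidden, grad_hidden)]
    new_out = [w - lr * g for w, g in zip(w_out, grad_out)]
    return new_hidden, new_out
```

Calling `train_step` repeatedly on the training samples is the "many, many iterations" part: each call nudges the weights so that the error on that sample shrinks a little.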

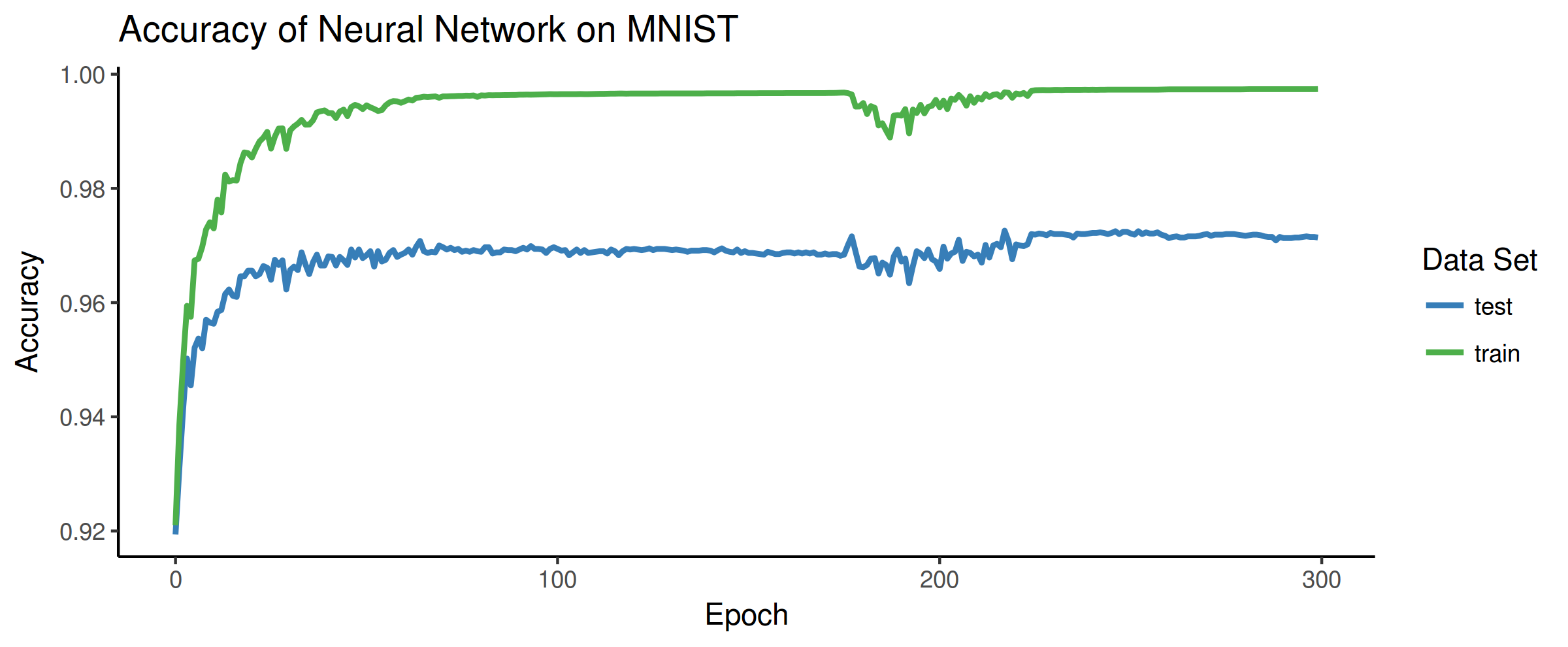

So, in keeping with my previous project, I implemented a basic backpropagation algorithm in C for training on the popular MNIST dataset. I used a combination of the GNU Scientific Library and OpenBLAS for all the heavy number crunching. For the network itself I went with 2 hidden layers of 100 and 30 neurons (4 layers in total, including the input and output layers). Below is the result after training on 50,000 images:

The green shows accuracy on the training data and the blue shows the neural network's accuracy on a separate set of testing data. The x-axis shows the number of epochs, that is, the number of times backpropagation went through all the training data and updated the network. Interestingly, after about 100 epochs the accuracy on the test data starts to decrease slightly. This is a sign that the network was overfitting to the training data. However, after around 180 epochs there is some disruption which ended up increasing the accuracy on both the training and testing data sets. Overall, the accuracy was 99.74% and 97.14% on the training and testing data, respectively.
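To give a sense of how large even this small network is, the sketch below counts its trainable parameters. The hidden layer sizes (100 and 30) come from the description above. The 784 inputs and 10 outputs are assumptions based on MNIST's 28x28 pixel images and ten digit classes, and the helper assumes one bias per non-input neuron, which may differ from the C implementation.

```python
def count_parameters(layer_sizes):
    """Return (weights, biases) for a fully connected feed-forward network."""
    # One weight per connection between consecutive layers.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # One bias per neuron outside the input layer (assumed).
    biases = sum(layer_sizes[1:])
    return weights, biases


# Assumed layer sizes: 784 inputs, hidden layers of 100 and 30, 10 outputs.
weights, biases = count_parameters([784, 100, 30, 10])
print(weights, biases)  # prints "81700 140"
```

Every one of those weights is adjusted on each backpropagation update, which is why a fast linear algebra library matters.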

As a final test, I got my lovely wife to draw any number on the computer (she chose '4'). I then fed this into the neural network to see if it could identify what she wrote:

Clearly there is something to these neural networks after all.

Thank you for reading.

Please check out Michael's book if you want to know more about neural networks. Also check out the code I wrote for this network on my GitHub.

Weekend Project - Cinnamon DE Applet

I’ve recently switched from using Xfce as my main desktop environment to Cinnamon. There are pluses and minuses to each but, so far, I am enjoying Cinnamon quite a lot. I wanted to personalize my desktop a little bit, beyond just changing the theme, and thought that making an applet would be a fun way to do this.

My favorite website to view Halo statistics stopped working recently. So my idea was to create a tiny applet where I could view current arena rankings for any player. And so 'minihalostats' was born over this past weekend.

The Cinnamon applet tutorial is pretty bad, to say the least. Not only is it outdated, but the code given in the tutorial won’t even run! There are also no links to further documentation or any additional reading. I ended up learning mostly from looking at the code of other people’s applets. The Cinnamon Spices GitHub repo was an excellent source for this.

Even given the frustrating lack of documentation, I enjoyed the process and even learned a little bit of JavaScript.

Code for 'minihalostats' is up on my GitHub.

Web-based Digital Picture Frame

My wife and I moved to Germany from Canada some time ago and every once in a while we get a little homesick. So I wanted to do a project that could help a little bit with that.

I wrote a webpage where my family can upload pictures and, if they want, a short message. The pictures are sent directly to my home server via an HTTP POST request. The request is parsed and the metadata is stored in an SQLite database, while the pictures themselves are stored in a directory. In the interest of security, I made sure that this directory is not accessible from outside my personal network. My sister does not want pictures of her children on the internet (a good decision in my opinion), so this seemed like a good way to accommodate that.
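The storage step can be sketched roughly as follows. This is an illustration in Python, not the project's actual code: the `pictures` table, its columns, and the `init_db` and `store_upload` helpers are hypothetical, and parsing the POST request itself is left out.

```python
import sqlite3
from pathlib import Path


def init_db(conn):
    """Create the (hypothetical) metadata table if it does not exist yet."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pictures ("
        " id INTEGER PRIMARY KEY,"
        " filename TEXT NOT NULL,"
        " message TEXT,"
        " shown INTEGER NOT NULL DEFAULT 0)"
    )


def store_upload(conn, image_dir, filename, data, message=""):
    """Save the image bytes to disk and record the metadata in SQLite."""
    # Keep only the final path component so an upload cannot escape image_dir.
    safe_name = Path(filename).name
    image_dir = Path(image_dir)
    image_dir.mkdir(parents=True, exist_ok=True)
    (image_dir / safe_name).write_bytes(data)
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO pictures (filename, message) VALUES (?, ?)",
            (safe_name, message),
        )
    return cur.lastrowid
```

Keeping the image bytes on disk and only the metadata in the database means the web server can serve the files directly, while the database stays small.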

To display the pictures, a CGI script queries the SQLite database for a random image and uses it as the background of a webpage. This is convenient because it allows me to easily superimpose text using a bit of CSS. After all images have been displayed once, it resets and starts the cycle over again. Chromium is set up on the Raspberry Pi to auto-refresh the page every minute with a new picture.
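One way to get the "random, but every picture once per cycle" behaviour is to keep a flag per picture and reset all flags once none are left. The Python sketch below assumes a hypothetical `pictures` table with a `shown` column. The real CGI script may track this differently.

```python
import sqlite3

# Hypothetical schema: one row per uploaded picture, with a "shown" flag.
SCHEMA = (
    "CREATE TABLE IF NOT EXISTS pictures ("
    " id INTEGER PRIMARY KEY,"
    " filename TEXT NOT NULL,"
    " message TEXT,"
    " shown INTEGER NOT NULL DEFAULT 0)"
)

PICK_UNSEEN = (
    "SELECT id, filename, message FROM pictures "
    "WHERE shown = 0 ORDER BY RANDOM() LIMIT 1"
)


def next_picture(conn):
    """Return (filename, message) of a random picture not yet shown this cycle."""
    row = conn.execute(PICK_UNSEEN).fetchone()
    if row is None:
        # Every picture has been displayed once: reset and start a new cycle.
        conn.execute("UPDATE pictures SET shown = 0")
        row = conn.execute(PICK_UNSEEN).fetchone()
        if row is None:
            return None  # the table is empty
    conn.execute("UPDATE pictures SET shown = 1 WHERE id = ?", (row[0],))
    conn.commit()
    return row[1], row[2]
```

The script would then write the returned filename into the page's CSS `background-image` and print the message on top of it.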

For the display, I used the official Raspberry Pi touchscreen. The quality of the picture is, honestly, pretty bad and I would recommend shopping around for a nicer quality display if this project interests you.

All in all, I’m quite happy with how it turned out and my wife definitely enjoys seeing new pictures of our family back home.

The code has also been uploaded to my GitHub.

Canadian 2016 Census - Population and Dwellings

View everything here

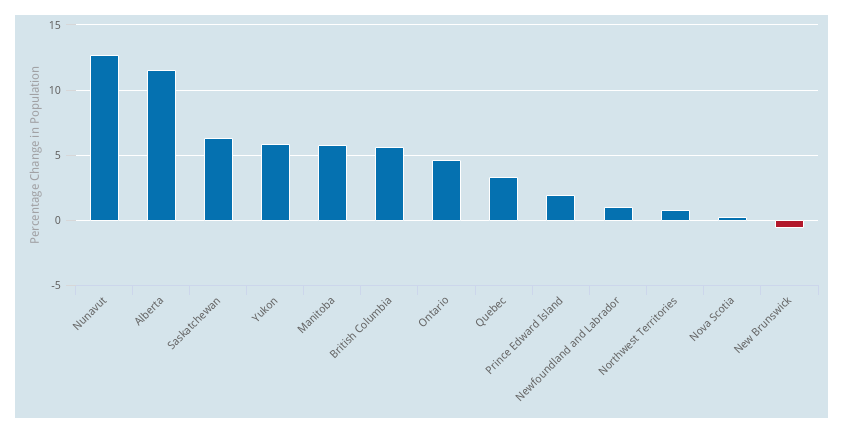

About a month ago, Statistics Canada finally started releasing summary statistics for the 2016 census. The long-form census was re-introduced last year, so over the course of this year there should be lots of interesting data to look through. As of right now, only information on population and dwelling counts has been released, with age and sex demographics scheduled for the beginning of May 2017.

I wanted to play around with a couple of new tools like Leaflet and Highcharts, and the census population data was the perfect test dataset. Leaflet is an awesome mapping library that feels really snappy in a browser, and the R wrapper is incredibly simple to use. I definitely recommend it for any type of geographic visualization. Flexdashboard was used to create a single-page HTML file that I then hosted on my web server.

I don't have much to say about the data since I'm not really in a position to draw any conclusions. It's mostly just interesting to see how things have changed in Canada over the past 5 years.

haloR - A New R Halo API Wrapper

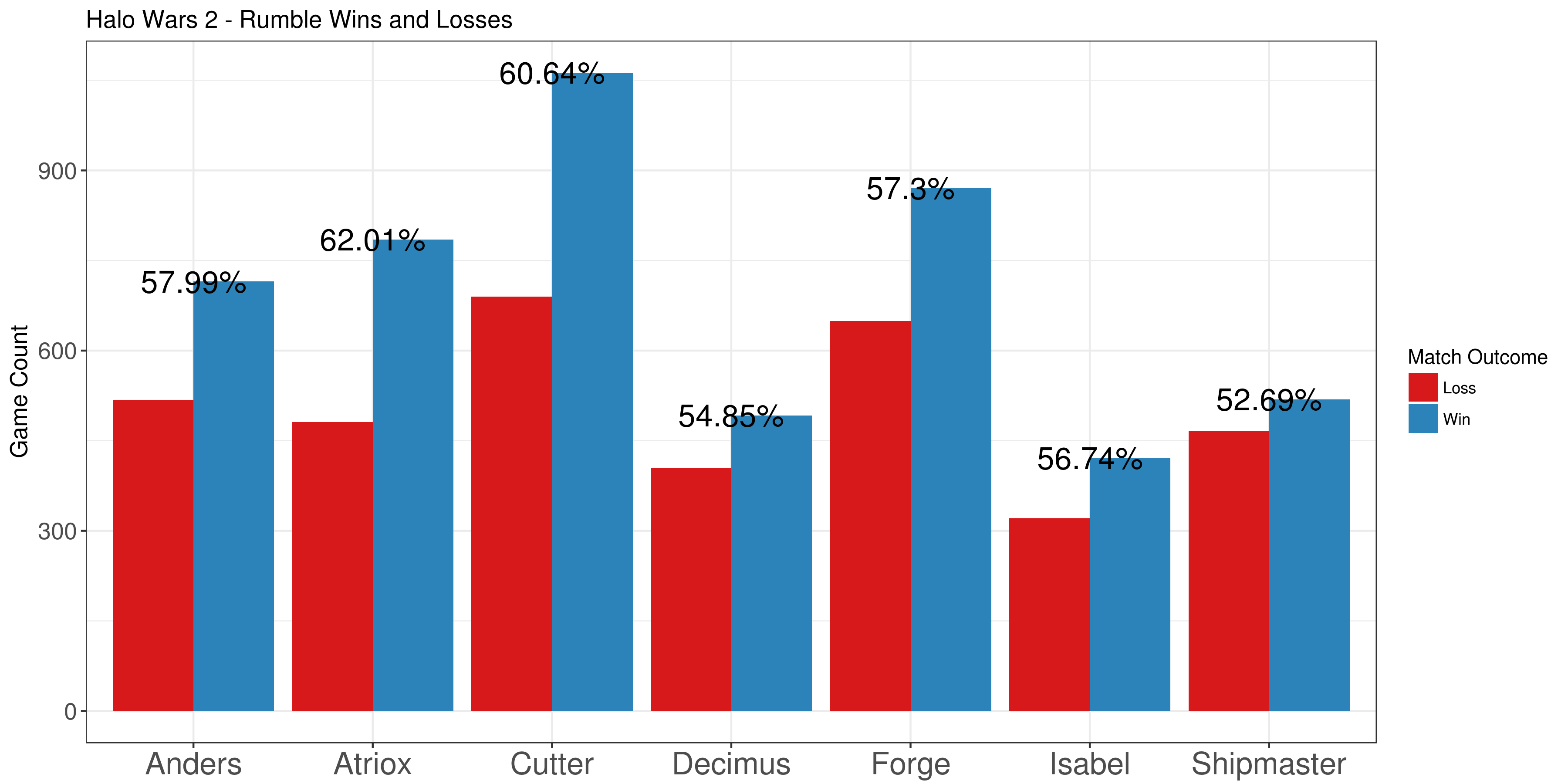

Earlier this week Microsoft released Halo Wars 2, a follow-up to the original, which has something of a cult following. In contrast to the mainline Halo titles, Halo Wars is a real-time strategy game. I've played through the Halo Wars 2 campaign and dipped my toes into the multiplayer. It isn't really for me (I prefer FPS) but it was still an enjoyable experience.

As with Halo 5, Microsoft and 343i have decided to open up much of the game's details to the public through their Halo API. I really enjoyed digging through Halo 5 data and it was a big part of what kept me interested in the game. Kudos to MS/343i for the work they do on this stuff.

Even though I don't plan to continue playing the game, I decided to update my Halo R API wrapper to include functions to easily get data from the Halo Wars 2 endpoints. Installation instructions and a tiny example can be found on the haloR GitHub.

Before using it, I suggest reading through the documentation provided by 343i, since the documentation for my package is kind of sparse and the returned objects can be a little bit cryptic without a reference.

And as an additional small example, I pulled some Halo Wars 2 data for the game mode 'Rumble'. This is a new mode where players have infinite resources and don't have to worry about their economy. I wanted to see which leader had the highest win rate, so I pulled a bunch of matches and graphed the win percentages (at the top of this post). It's interesting that the two main story characters, Cutter and Atriox, have the highest win rates.
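The win-rate calculation itself is simple once the match results are in hand. The sketch below is in Python rather than R and does not use haloR or the Halo API. The `win_rates` helper and the `(leader, won)` input shape are assumptions for illustration, and the sample matches are made up.

```python
from collections import Counter


def win_rates(matches):
    """Map each leader to the percentage of their matches that were won.

    `matches` is an iterable of (leader, won) pairs, where `won` is a bool.
    """
    played, won = Counter(), Counter()
    for leader, did_win in matches:
        played[leader] += 1
        if did_win:
            won[leader] += 1
    return {leader: 100.0 * won[leader] / played[leader] for leader in played}


# Made-up sample data, not real match results.
sample = [("Cutter", True), ("Cutter", False), ("Atriox", True)]
print(win_rates(sample))
```

With real data, each pair would come from one player's result in a Rumble match returned by the API.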