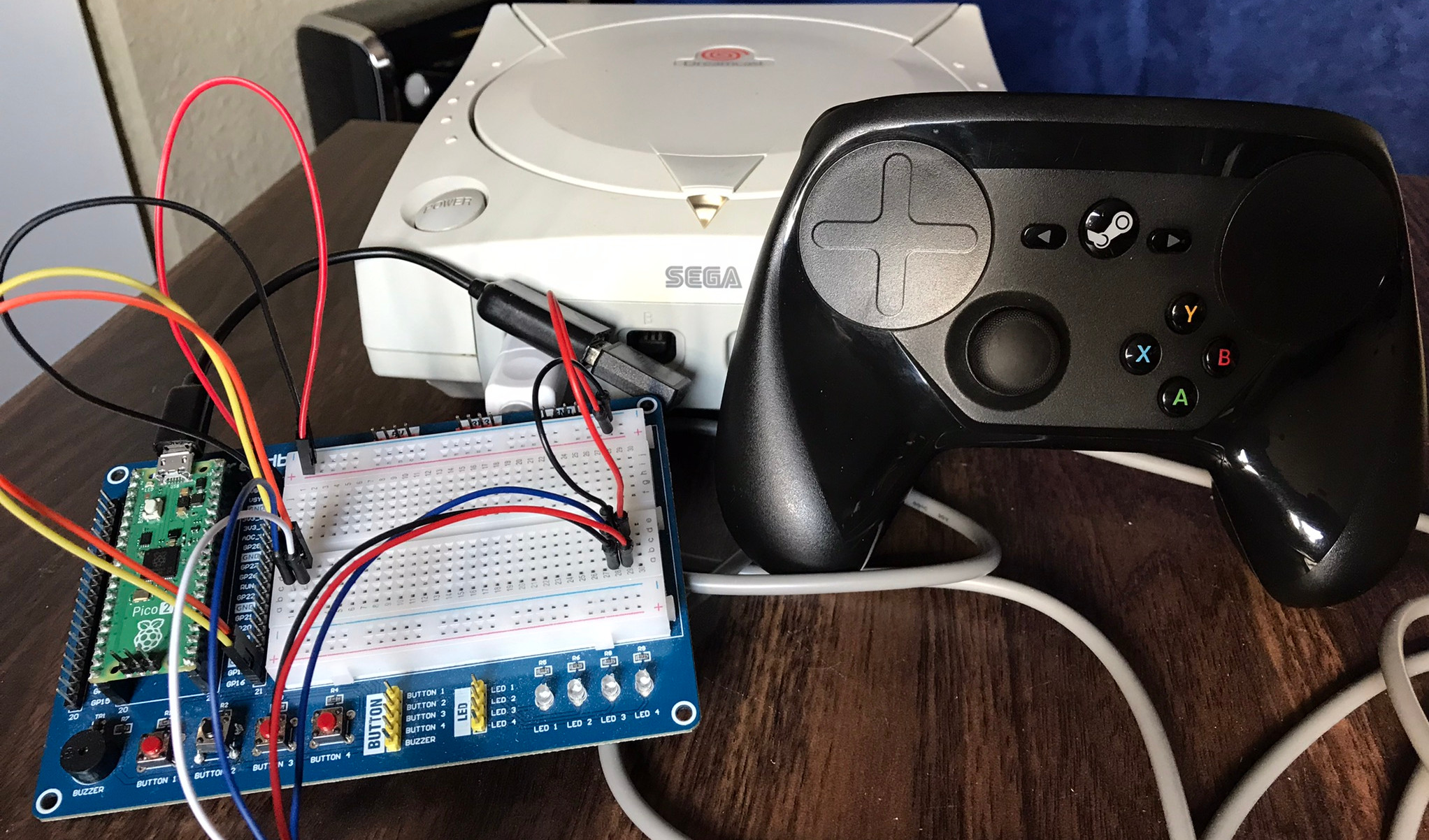

Since getting the Steam Controller working on the Sega Dreamcast, I've been expanding the idea into a general-purpose USB-to-Dreamcast device with an eye on potentially selling it as a product. To that end, I've created a few prototype devices of something I'm calling Pico2Maple.

Pico2Maple is a dongle that plugs into the Dreamcast and allows USB devices to be used on the system in place of original Dreamcast peripherals. Several controllers, keyboards, and even some mice are supported (yes, the Dreamcast had an official keyboard and mouse). Additionally, Pico2Maple emulates a VMU - the Dreamcast's memory card - complete with a tiny OLED screen, and saves files to a microSD card for easy backups.

So far, I've confirmed the following controllers to work:

- Steam Controller

- 8BitDo SN30 Xbox

- XInput controllers (Xbox 360, One, Series)

- Sony DualSense (PS5)

- 8BitDo Wireless Dongle (great for connecting a variety of other controllers wirelessly)

Most USB keyboards should work for games like Typing of the Dead or chatting in Phantasy Star Online. Mouse support does need a bit of improvement but chances are good most will work; it's hard to find enough hardware to test things like this.

Some USB devices will report multiple descriptors (a mouse may report as a mouse and a keyboard) for extra functionality. Pico2Maple will try to use the first valid device it sees and pass that through to the Dreamcast. Unfortunately, it's not possible to report multiple devices through a single Dreamcast controller port.
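To make the "first valid device" rule concrete, here is a minimal sketch in Python. It is not the actual firmware, which isn't public. The names `UsbInterface`, `SUPPORTED_KINDS`, and `pick_interface` are hypothetical, and the device kinds are simplified to plain strings purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical: the kinds of USB device that could be translated
# into a single Dreamcast peripheral.
SUPPORTED_KINDS = ("controller", "keyboard", "mouse")


@dataclass(frozen=True)
class UsbInterface:
    """One interface reported by a USB device (simplified, illustrative)."""
    index: int
    kind: str


def pick_interface(interfaces: Sequence[UsbInterface]) -> Optional[UsbInterface]:
    """Return the first interface of a supported kind, or None if there is none.

    Only one interface is chosen because a single Dreamcast controller
    port can present only one device.
    """
    for iface in interfaces:
        if iface.kind in SUPPORTED_KINDS:
            return iface
    return None


# A mouse that also reports a vendor-specific interface and a keyboard.
combo_mouse = [
    UsbInterface(0, "vendor"),
    UsbInterface(1, "mouse"),
    UsbInterface(2, "keyboard"),
]
chosen = pick_interface(combo_mouse)
```

In this sketch the combo device ends up being treated as a mouse: the vendor interface is skipped, and the keyboard interface that comes later is ignored.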

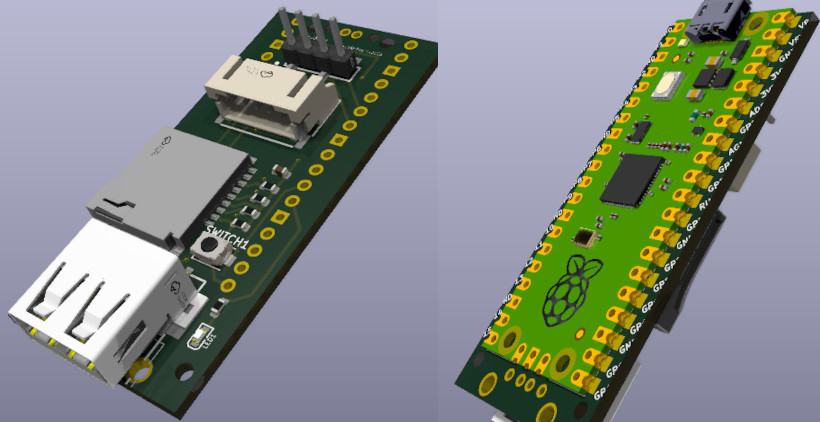

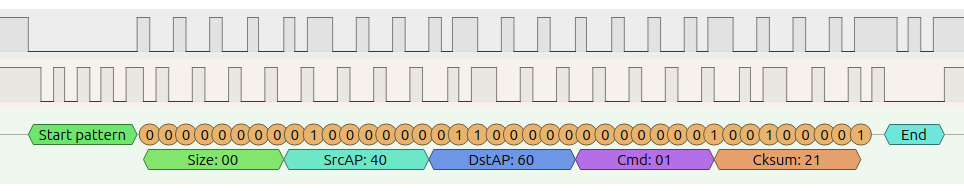

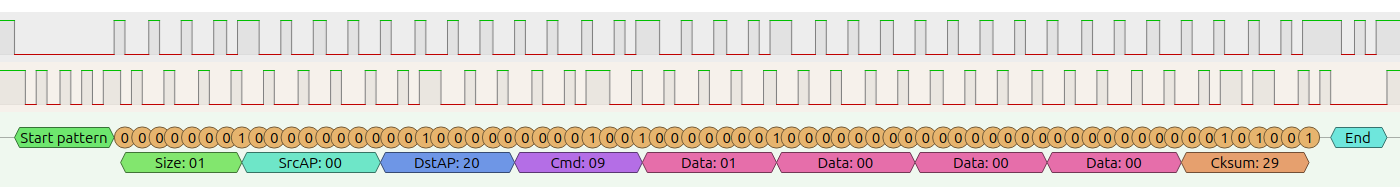

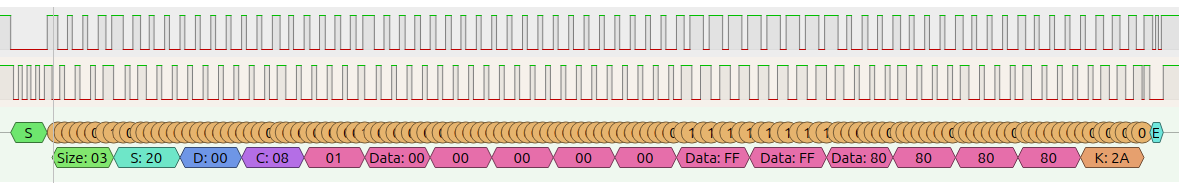

The brains of the device is a Raspberry Pi Pico 2 with my custom firmware and Maple bus implementation. The firmware is not open source at the moment, but the binaries are available on my GitHub if anybody wants to make their own version of the hardware.

When I started working on this project, the RP2350 chip powering the Pico 2 was not readily available, so I designed a custom PCB that the Pico 2 board could be soldered directly onto. Since most of the 'real' electronics are on the Pico board, my PCB is more-or-less just a way to connect all the extra components and peripherals, with a form factor that would work well for a dongle.

Surprisingly, the prototype PCBs I ordered worked great! I must have gotten lucky because there were no functional issues with the boards. Because of the insane shipping times to where I live, though, I was moving a bit fast and hadn't completely decided on a final design when I ordered the PCBs. That's why the USB port is facing the wrong direction, which meant I had to do a bit of extra manual prep work on the boards. I hope to do a second revision to fix that, and move around a few components for a better overall fit.

In addition to the custom PCB, this project was also my first experience with CAD and 3D printing. I designed the entire enclosure for the dongle in FreeCAD and printed the parts on a Bambu Lab P1S. The main body of the dongle is made up of three pieces that sandwich the electronics together. Four M2 screws, with threaded inserts, extend through the whole dongle and squeeze everything tight.

It took quite a bit of tweaking and test-printing to get the fit I was going for, but I think it was worth it in the end. The dongles came out looking almost exactly like what I had imagined! (Wavebird dongle vibes anyone?)

The final dimensions of the dongle are roughly 30mm wide, 25mm deep, and 61mm tall. I would say they are a smidge too wide and are therefore a bit too snug when multiple are plugged in. The microSD card also sticks out about 1-2mm so, even though four can fit in the console by themselves, not all would be able to have SD cards plugged in. Ideally I'd shave off maybe 2mm from the width and have the microSD cards be flush for a better fit.

Other than being a bit too wide, I think the rest of the dimensions are perfect and they look pretty slick when plugged into the Dreamcast.

I mentioned earlier that I hope to produce and sell these to fellow Dreamcast enthusiasts, but the current roadblock is that assembly simply takes way too long to make it worth it. Between soldering the Pico to the main board, wiring up the Dreamcast plug, and final assembly, the whole process takes maybe 45-60 minutes, which is too long for me. Plus, I don't really like being hunched over a soldering iron for so long.

I could save some time if JLCPCB could presolder the Pico 2 boards for me, or if I could design a PCB using the RP2350 chip directly. But that still leaves wiring up the Dreamcast plug, which is what really takes the bulk of the time: trimming wires and soldering the pins. I'd guess it would still take around 30 minutes to put one together, which still seems too long.

If anybody has any tips on speeding up manufacturing, I'd love to hear them. I would also be open to potentially licensing the firmware and letting somebody else handle the manufacturing. Either way, feel free to reach out!

Thanks for reading!